Last week, the LangChain team made LangSmith, a tool that adds tracing and observability to the LangChain ecosystem, generally available. It was my first chance to sit down and get hands-on with the LangChain ecosystem. Here’s what I found.

Right now, the world of artificial intelligence is complex and intimidating: it is full of jargon and technical concepts, and it moves extremely fast. It’s already old news that language models have taken over the industry, and much of the world along with it. LangChain is a framework for developing context-aware applications that use language models to reason. At its core, it is designed to bridge the gap between the theoretical capabilities of language models and their practical application to real-world problems, and the wider LangChain ecosystem makes it remarkably easy to build applications that take advantage of them.

The Ecosystem

A picture is worth more than a thousand words. Here’s how the LangChain ecosystem looks:

The LangChain ecosystem provides four main tools:

LangChain

LangChain is a library of components that you chain together to build applications on top of language models. Its core strength lies in “chains”, which are sequences of prompts, models, and output parsers that work together to achieve a specific goal. Whether you want to build a chatbot that can hold a conversation, a system that answers your questions informatively, or agents that carry out specific tasks, LangChain provides the building blocks you need.
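To make the idea of a chain concrete, here is a minimal sketch of a prompt, a model, and an output parser piped together. It is a toy in plain Python, not LangChain’s API: `Step`, the prompt template, and `fake_model` are hypothetical, and the “model” returns a canned reply so the example runs without an API key. It only borrows the `|` composition style that LangChain Expression Language uses for real chains.

```python
from typing import Any, Callable


class Step:
    """Hypothetical chain step: wraps a function and composes with `|`."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Feed this step's output into the next step's input.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Prompt: turn structured input into the text sent to the model.
prompt = Step(lambda inputs: f"List three facts about {inputs['topic']}, comma-separated.")

# Model: a fake stand-in that ignores the prompt and returns a canned reply.
fake_model = Step(lambda text: "dynamic typing, huge ecosystem, readable syntax")

# Output parser: turn the raw reply into a Python list.
parser = Step(lambda reply: [item.strip() for item in reply.split(",")])

chain = prompt | fake_model | parser
print(chain.invoke({"topic": "Python"}))
# ['dynamic typing', 'huge ecosystem', 'readable syntax']
```

Each step only has to agree with its neighbours on what it passes along, which is why swapping the fake model for a real one would not change the shape of the pipeline.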

It’s the core of the ecosystem. It provides model I/O components (chat models, LLMs, and output parsers); retrieval tools for Retrieval-Augmented Generation (RAG), namely document loaders (including loaders for web-browsing APIs), text processing and splitting, and vector stores; indexing tools; and, finally, agents, which use a language model as a reasoning engine to decide which actions to take and in which order. Check out the full list of integrations on the LangChain website.
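The retrieval half of RAG is easier to follow with a toy in front of you. The sketch below splits a small document into sentence-sized chunks, “embeds” each chunk as lower-cased word counts, and returns the chunk most similar to a question by cosine similarity; that chunk is then pasted into the prompt as context. Everything here (`split_text`, `embed`, `ToyVectorStore`) is hypothetical and stdlib-only: LangChain’s text splitters, embedding models, and vector stores do the same jobs with far better components, and word counts are only a stand-in for learned embeddings.

```python
import math
import re
from collections import Counter


def split_text(text: str) -> list[str]:
    """Naive splitter: one chunk per sentence."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def embed(text: str) -> Counter:
    """Toy 'embedding': counts of lower-cased words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store of (chunk, vector) pairs."""

    def __init__(self, chunks: list[str]) -> None:
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query: str, k: int = 1) -> list[str]:
        # Rank every chunk by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(q, entry[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


docs = (
    "LangServe deploys chains as REST APIs. "
    "LangSmith records traces for debugging and evaluation. "
    "LangGraph adds cycles so agents can loop."
)
store = ToyVectorStore(split_text(docs))

question = "Which tool deploys chains as REST APIs?"
context = store.search(question, k=1)[0]
augmented_prompt = f"Answer using this context:\n{context}\n\nQuestion: {question}"
print(augmented_prompt)
```

The “augmented” in RAG is just that last string: the model never searches anything itself, it only sees whatever the retriever put into its prompt.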

LangServe

LangServe helps developers deploy LangChain runnables and chains as a REST API, as shown in the picture below.

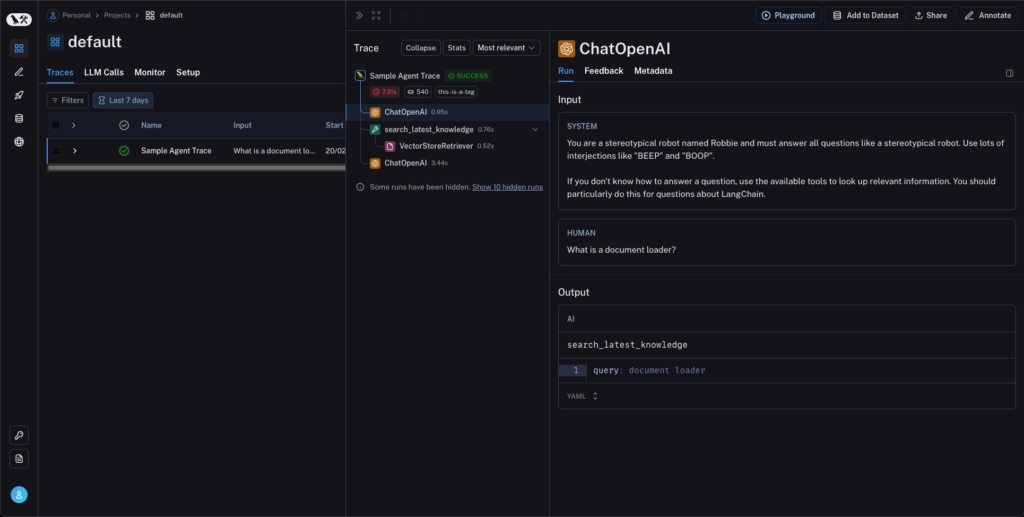
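What LangServe automates is, at heart, putting an HTTP endpoint in front of a chain. The stdlib-only sketch below imitates that with a tiny WSGI app: a POST to `/invoke` with a JSON body such as `{"input": "hello"}` runs the chain and returns `{"output": ...}`, loosely modeled on the invoke endpoint LangServe generates. It is not LangServe itself; `shout_chain`, `make_app`, and `call` are hypothetical, and with the real library you would mount an actual chain on a FastAPI app with `add_routes`.

```python
import io
import json
from typing import Any, Callable
from wsgiref.util import setup_testing_defaults


def shout_chain(text: str) -> str:
    """Hypothetical stand-in for a chain: upper-cases its input."""
    return text.upper()


def make_app(chain: Callable[[Any], Any]):
    """Wrap a chain in a minimal WSGI app exposing POST /invoke."""

    def app(environ, start_response):
        headers = [("Content-Type", "application/json")]
        if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/invoke":
            start_response("404 Not Found", headers)
            return [b'{"error": "not found"}']
        length = int(environ.get("CONTENT_LENGTH") or 0)
        try:
            # Expect a JSON object with an "input" key, as in {"input": "hello"}.
            chain_input = json.loads(environ["wsgi.input"].read(length))["input"]
        except (ValueError, KeyError, TypeError):
            start_response("400 Bad Request", headers)
            return [b'{"error": "bad request"}']
        body = json.dumps({"output": chain(chain_input)}).encode()
        start_response("200 OK", headers)
        return [body]

    return app


app = make_app(shout_chain)


def call(app, path: str, payload: Any) -> tuple[str, dict]:
    """Invoke the WSGI app in-process (no network) and decode its JSON reply."""
    raw = json.dumps(payload).encode()
    environ: dict = {}
    setup_testing_defaults(environ)
    environ.update({"REQUEST_METHOD": "POST", "PATH_INFO": path,
                    "CONTENT_LENGTH": str(len(raw)), "wsgi.input": io.BytesIO(raw)})
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


print(call(app, "/invoke", {"input": "hello"}))
# ('200 OK', {'output': 'HELLO'})

# To serve it over HTTP instead:
# from wsgiref.simple_server import make_server
# make_server("localhost", 8000, app).serve_forever()
```

The point of the exercise is the contract, not the server: once a chain is a function from input to output, exposing it over HTTP is mostly plumbing, and that plumbing is what LangServe takes off your hands.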

LangSmith

LangSmith helps you trace and evaluate your language model applications and agents (as shown in the picture).

LangGraph

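LangGraph is a library that helps you coordinate multiple chains (or actors). Its main use is adding cycles to your LLM application: a plain chain runs as a directed acyclic graph, while agent-style workflows often need to loop, for example calling a tool, inspecting the result, and deciding whether to try again.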
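The difference a cycle makes is easiest to see in a loop that a straight chain cannot express: draft an answer, review it, and go back to drafting until the review passes. The sketch below is a hypothetical, stdlib-only state graph (`ToyGraph`, `draft`, and `review` are made up for illustration) in which each node returns an updated state and a router picks the next node. LangGraph’s real API has a similar shape, with `StateGraph`, `add_node`, `add_edge`, and `add_conditional_edges`, but its signatures differ from this toy.

```python
from typing import Callable

State = dict
END = "__end__"


class ToyGraph:
    """Hypothetical graph runner: nodes update state, routers choose the next node."""

    def __init__(self, entry: str) -> None:
        self.entry = entry
        self.nodes: dict[str, Callable[[State], State]] = {}
        self.routes: dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_route(self, source: str, router: Callable[[State], str]) -> None:
        # The router inspects the state and returns the next node name (or END).
        self.routes[source] = router

    def run(self, state: State, max_steps: int = 20) -> State:
        current = self.entry
        for _ in range(max_steps):  # guard against cycles that never terminate
            state = self.nodes[current](state)
            current = self.routes[current](state)
            if current == END:
                return state
        raise RuntimeError("max_steps exceeded without reaching END")


def draft(state: State) -> State:
    # Stand-in for an LLM call: each attempt produces a longer draft.
    attempt = state["attempts"] + 1
    return {**state, "attempts": attempt, "draft": "idea " * attempt}


def review(state: State) -> State:
    # Stand-in for a checker: accept drafts with at least three words.
    return {**state, "approved": len(state["draft"].split()) >= 3}


graph = ToyGraph(entry="draft")
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_route("draft", lambda s: "review")
graph.add_route("review", lambda s: END if s["approved"] else "draft")  # the cycle

result = graph.run({"attempts": 0})
print(result["attempts"], result["approved"])
# 3 True
```

The `max_steps` guard matters as soon as cycles are allowed: a router that never returns `END` would otherwise loop forever, so some upper bound on steps is a sensible default in any graph runner of this kind.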

Conclusion

If you’re looking to dip your toes into the world of language models, LangChain is a great starting point. The whole ecosystem is designed to take your ideas to production in very few lines of code. Head to the LangChain documentation, or to the cookbook if you know your way around Jupyter notebooks, to learn more.